PySpark Range Between Example

`Window.rangeBetween(start: int, end: int) → pyspark.sql.window.WindowSpec` creates a WindowSpec with the frame boundaries defined, from `start` (inclusive) to `end` (inclusive). It is the main tool for defining value-based window frames in Apache Spark: rather than counting rows, the frame includes every row whose ordering-column value falls within a given range of the current row's value. A typical use case is a Spark SQL DataFrame with a date column, where for each row we want all the preceding rows that fall within a given date range. To handle date ranges effectively, partition the data by a specific column (such as an id) and then order each partition by the date. By contrast, the `between` method is a convenient way to filter DataFrame rows based on a single column, keeping the rows whose value lies between a lower and an upper bound. Start a Spark session (and with it a Spark context) before running any of this.

from www.vipmind.me

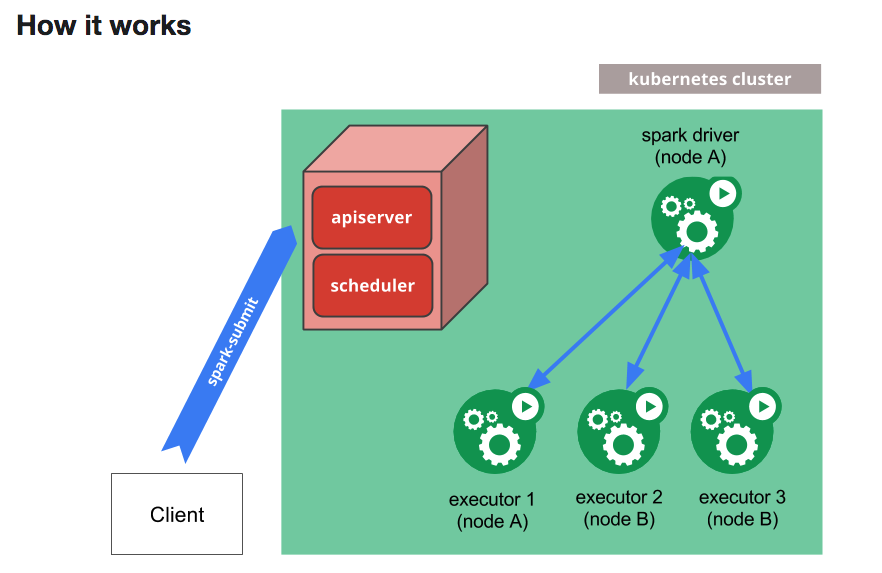

Mind Deploy pySpark jobs into with python dependencies

From dvpjcjxaeco.blob.core.windows.net

Airflow Pyspark Example at Sharon Burgess blog

From www.vipmind.me

Mind Deploy pySpark jobs into with python dependencies

From www.deeplearningnerds.com

PySpark Aggregate Functions

From www.educba.com

PySpark vs Python Top 8 Differences You Should Know

From github.com

pysparkexamples/pysparkexplodenestedarray.py at master · spark

From www.youtube.com

Pandas vs Pyspark Comparison on datasets greater than 10 Gb YouTube

From www.machinelearningplus.com

PySpark Archives Page 3 of 3 Machine Learning Plus

From www.linkedin.com

Comparison SQL vs Pandas vs PySpark

From towardsdev.com

PySpark A Comprehensive Guide For DataFrames(Part1) by Sukesh

From sparkbyexamples.com

PySpark between() Example Spark By {Examples}

From medium.com

Get Started with PySpark and Jupyter Notebook in 3 Minutes

From zhuanlan.zhihu.com

How many types of operators does a PySpark RDD have? Zhihu

From sparkbyexamples.com

PySpark SQL with Examples Spark By {Examples}

From stackoverflow.com

pyspark Compare sum of values between two specific date ranges over

From www.codingninjas.com

PySpark Tutorial Coding Ninjas

From sparkbyexamples.com

PySpark Count Distinct from DataFrame Spark By {Examples}

From www.youtube.com

rows between in spark range between in spark window function in

From www.programmingfunda.com

PySpark Sort Function with Examples » Programming Funda